¶ Trigger Built-in Functions

A number of helper functions and classes are available for interacting with the IoT Bridge, encoding and decoding data, and converting between formats.

¶ API Loopback

The following functions let a trigger call the IoT Bridge HTTPS API, so that key onboarding tasks can be automated from triggers. Refer to the API documentation for details about specific API calls.

Triggers using the api.xxx() functions operate as administrators within your account, so take care not to damage the data in your account.

// list all services and print the service names

var rsp = api.get('/api/v1/service')

if(rsp.status==200) {

rsp.body.forEach(function(svc) {

log.trace('service: ' + svc.name)

})

}

// list all services whose name includes "mqtt"

var rsp = api.get('/api/v1/service',{search: 'name==contains("mqtt")'})

if(rsp.status==200) {

rsp.body.forEach(function(svc) {

log.trace('service: ' + svc.name)

})

}

// create a new instance of the cron service

var rsp = api.post('/api/v1/service', null, {

name: 'myservice',

key: 'myservice',

modelId: '81f12e7e5e3fe2ad1d04bf17',

params: {

enabled: true,

cron: '0 * * * *'

}

})

// delete a service

var rsp = api.delete('/api/v1/service/'+'81f12e7e5e3fe2ad1d04bf17')

These functions are intended to expose the API for provisioning and commissioning use cases. They are not intended to be called "every time a device reports"; if you have such a use case, please open a support ticket to find alternative designs.

¶ Conversion

The following functions assist with converting string representations of binary data to byte arrays for easy manipulation in the trigger.

// convert base64 encoded string to byte[]

var bytes = convert.b64ToBin('aGVsbG8gd29ybGQ=')

// convert byte[] to base64 encoded string

var str = convert.binToB64([0x01, 0x02, 0x03])

// convert base64 encoded string to hex encoded string

var hex = convert.b64ToHex('aGVsbG8gd29ybGQ=')

// convert hex encoded string to base64 encoded string

var b64 = convert.hexToB64('010203')

// convert hex encoded string to byte[]

var bytes = convert.hexToBin('010203')

// convert byte[] to hex encoded string

var str = convert.binToHex([0x01, 0x02, 0x03])

// convert CBOR byte[] to javascript objects

var obj = convert.cborToObject([0x84, 0x18, 0xFB, 0x19, 0x01, 0x07, 0x19, 0x01, 0x03, 0x19, 0x01, 0x05])

// obj will be [251, 263, 259, 261]
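To see why that byte sequence yields those four numbers, the following is a minimal hand-written sketch of the CBOR rules involved. It only handles an array of small unsigned integers, and `decodeCborUintArray` is a hypothetical helper for illustration, not part of the trigger API. In a trigger you would simply call `convert.cborToObject()`.

```javascript
// Sketch only: decodes a CBOR array of unsigned integers (arguments up to 2 bytes).
// decodeCborUintArray is a hypothetical helper, not a platform function.
function decodeCborUintArray(bytes) {
  var pos = 0

  // Read one CBOR header byte plus its argument.
  function readHead() {
    var initial = bytes[pos++]
    var major = initial >> 5   // top 3 bits: major type
    var info = initial & 0x1f  // low 5 bits: additional information
    var value
    if (info < 24) {
      value = info             // value is stored directly in the header byte
    } else if (info === 24) {
      value = bytes[pos++]     // a 1 byte argument follows
    } else if (info === 25) {
      value = (bytes[pos] << 8) | bytes[pos + 1] // a 2 byte big-endian argument follows
      pos += 2
    } else {
      throw new Error('unsupported CBOR additional info: ' + info)
    }
    return { major: major, value: value }
  }

  var head = readHead()
  if (head.major !== 4) {
    throw new Error('expected a CBOR array (major type 4)')
  }
  var out = []
  for (var i = 0; i < head.value; i++) {
    var item = readHead()
    if (item.major !== 0) {
      throw new Error('expected an unsigned integer (major type 0)')
    }
    out.push(item.value)
  }
  return out
}

// 0x84 = array of 4 items; 0x18 0xFB = 251; 0x19 0x01 0x07 = 263; and so on.
var decoded = decodeCborUintArray([0x84, 0x18, 0xFB, 0x19, 0x01, 0x07, 0x19, 0x01, 0x03, 0x19, 0x01, 0x05])
console.log(decoded) // [ 251, 263, 259, 261 ]
```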

¶ Binary readers

There are two readers available for processing binary payloads: a binReader and a bitReader. As their names imply, the bitReader is designed for complex bit-packed payloads where data is packed at arbitrary bit offsets within a byte array. The binReader is designed for byte-oriented data frames where all fields are aligned on a byte boundary. While there is an overlap in functionality, the binReader is significantly faster since it operates only at the byte level, so it should be used if all data fields align on byte boundaries.

¶ Byte Reader

The byte reader allows for rapid decoding of binary data that is generally byte-aligned. It supports "streaming" style parsing, where reads begin at offset 0 in the buffer and each read advances the cursor in the stream.

¶ Notes

- The skip() method allows skipping ahead (positive value) or backwards (negative value) in the stream.

- The seek() method allows changing the location of the cursor in the stream.

- The default byte ordering is big-endian.

- The skip(), seek(), big(), and little() methods support chaining so they can be combined with read method calls.

- The status() method returns the index, length, and buffer for the reader. Note the buffer is an array of bytes, but when printed in logs may be base64 encoded.

¶ Samples

// create a new byte reader assuming little endian byte ordering (default is big).

var reader = convert.binReader(event.data.payload, {endian: 'little'})

// read an unsigned 8, 16, or 32 bit integer

var num = reader.readUint8()

var num = reader.readUint16()

var num = reader.readUint32()

// read a signed 8, 16, or 32 bit integer

var num = reader.readInt8()

var num = reader.readInt16()

var num = reader.readInt32()

// read a 32 or 64 bit floating value

var f = reader.readFloat32()

var f = reader.readFloat64()

// read a 10 byte string

var s = reader.readString(10)

// read a string until the end of the buffer

var s = reader.readString(-1)

// read 10 bytes, and format the output as a hex encoded string

var s = reader.readBytes(10, 'hex')

// read 10 bytes, and format the output as a base64 encoded string

var s = reader.readBytes(10, 'base64')

// read 10 bytes, and output the bytes as an array of bytes

var arr = reader.readBytes(10, 'binary')

var arr = reader.readBytes(10)

// skip 1 byte and advance the cursor

reader.skip(1)

// skip 1 byte, and read a 1 byte integer in a single statement

var num = reader.skip(1).readInt8()

// read a 4 byte value as bytes, then skip backwards and re-read it as a number.

// bytes = reader.readBytes(4)

// asNum = reader.skip(-4).readInt32()

// reset the reader to the first byte in the buffer to re-read it

reader.seek(0)

// get the status of the read buffer

var st = reader.status()

// st = { index: 17, length: 25, buffer: [0x01, 0x02...]}

// set the reader into little endian mode

reader.little()

// set the reader into big endian mode

reader.big()
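The cursor, chaining, and byte-order behaviour described in the notes can be pictured with a small stand-alone sketch. `SketchByteReader` is a hypothetical class built on the standard `DataView` API. It is not the platform's binReader and only mirrors a few of the documented method names for illustration.

```javascript
// Sketch only: SketchByteReader is a hypothetical stand-in, not the platform's binReader.
function SketchByteReader(bytes, littleEndian) {
  this.view = new DataView(Uint8Array.from(bytes).buffer)
  this.index = 0 // cursor position in bytes
  this.littleEndian = !!littleEndian
}

// Cursor and byte-order methods return the reader so calls can be chained.
SketchByteReader.prototype.skip = function (n) { this.index += n; return this }
SketchByteReader.prototype.seek = function (i) { this.index = i; return this }
SketchByteReader.prototype.little = function () { this.littleEndian = true; return this }
SketchByteReader.prototype.big = function () { this.littleEndian = false; return this }

// Each read returns a value and advances the cursor by the field width.
SketchByteReader.prototype.readUint8 = function () {
  var v = this.view.getUint8(this.index)
  this.index += 1
  return v
}
SketchByteReader.prototype.readUint16 = function () {
  var v = this.view.getUint16(this.index, this.littleEndian)
  this.index += 2
  return v
}
SketchByteReader.prototype.readInt16 = function () {
  var v = this.view.getInt16(this.index, this.littleEndian)
  this.index += 2
  return v
}

var r = new SketchByteReader([0x01, 0x04, 0xE2, 0xFF, 0xFE])
var kind = r.readUint8()                           // 1
var bigValue = r.readUint16()                      // 0x04E2 = 1250
var littleValue = r.skip(-2).little().readUint16() // same two bytes as 0xE204 = 57860
var signed = r.big().readInt16()                   // 0xFFFE = -2
```

Re-reading the same two bytes in both byte orders shows why the `endian` option matters: the payload is identical, but the decoded number is not.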

¶ Bit Reader

The bit reader allows for rapid decoding of binary data that is generally bit-aligned. It supports "streaming" style parsing, where reads begin at offset 0 in the buffer and each read advances the cursor in the stream.

¶ Notes

- The skip() method allows skipping ahead (positive value) or backwards (negative value) in the stream.

- The seek() method allows changing the location of the cursor in the stream.

- The ignoreIf() method allows for dynamic ignoring of subsequent parser calls for data streams with dynamic contents.

- The default byte ordering is big-endian.

- The skip(), seek(), big(), and little() methods support chaining so they can be combined with read method calls.

- The status() method returns the index, length, and buffer for the reader. Note the buffer is an array of bytes, but when printed in logs may be base64 encoded.

¶ Samples

// create a new bit reader assuming little endian byte ordering (default is big).

var reader = convert.bitReader(event.data.payload, {endian: 'little'})

// readInt(bits) reads the number of bits as a signed integer

var num = reader.readInt(4)

var num = reader.readInt(8)

// readUint(bits) reads the number of bits as an unsigned integer

var num = reader.readUint(4)

var num = reader.readUint(8)

// readBool() reads the next bit as a boolean value

var bool = reader.readBool()

// readString(bits) reads the number of bits as a string, must be a multiple of 8

var str = reader.readString(64)

// readBits(bits, format) reads the number of bits, and formats the bit array in different ways

var bytes = reader.readBits(9, 'binary') // reads the bits and returns a byte array

var hex = reader.readBits(9, 'hex') // reads the bits and returns a hex encoded string

var base64 = reader.readBits(9, 'base64') // reads the bits and returns a base64 encoded string

// skip 1 bit and advance the cursor

reader.skip(1)

// skip 1 bit, and read a 1 byte integer in a single statement

var num = reader.skip(1).readInt(8)

// read a 4 bit value as bytes, then skip backwards and re-read it as a number.

// bytes = reader.readBits(4, 'binary')

// asNum = reader.skip(-4).readInt(4)

// reset the reader to the first bit in the buffer to re-read it

reader.seek(0)

// get the status of the read buffer, the indices are the bit numbers.

var st = reader.status()

// st = { index: 17, length: 25, buffer: [0x01, 0x02...]}

// ignoreIf(check, ignoreNext) check is a boolean input, if true, then the next 'ignoreNext' reader methods will be ignored.

// Useful for dynamic parsing of data if the values indicate that data is present or not.

reader.ignoreIf(dataPresent,3)

// set the reader into little endian mode

reader.little()

// set the reader into big endian mode

reader.big()
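As an illustration of bit-level parsing, here is a stand-alone sketch of reading fields at arbitrary bit offsets. `SketchBitReader` is hypothetical, and it assumes bits are consumed most-significant-bit first within each byte. That ordering is an assumption for the example, so verify it against real payloads before relying on it with the platform's bitReader.

```javascript
// Sketch only: SketchBitReader is a hypothetical stand-in, not the platform's bitReader.
// Assumption: bits are consumed most-significant-bit first within each byte.
function SketchBitReader(bytes) {
  this.bytes = bytes
  this.index = 0 // cursor position in bits
}

SketchBitReader.prototype.skip = function (n) { this.index += n; return this }

// Read `bits` bits as an unsigned integer.
SketchBitReader.prototype.readUint = function (bits) {
  var value = 0
  for (var i = 0; i < bits; i++) {
    var byte = this.bytes[this.index >> 3]               // byte containing the current bit
    var bit = (byte >> (7 - (this.index & 7))) & 1       // pick the bit, MSB first
    value = value * 2 + bit
    this.index++
  }
  return value
}

// Read the next bit as a boolean.
SketchBitReader.prototype.readBool = function () { return this.readUint(1) === 1 }

// Read `bits` bits as a two's-complement signed integer.
SketchBitReader.prototype.readInt = function (bits) {
  var raw = this.readUint(bits)
  var signBit = Math.pow(2, bits - 1)
  return raw >= signBit ? raw - signBit * 2 : raw
}

// 0xB5 0xC0 = 1011 0101 1100 0000
var br = new SketchBitReader([0xB5, 0xC0])
var flag = br.readBool()   // bit 1 -> true
var level = br.readUint(3) // bits 011 -> 3
var delta = br.readInt(4)  // bits 0101 -> 5
var offset = br.readInt(4) // bits 1100 -> -4
```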

¶ Binary writers

A binWriter is available to quickly build binary packets for sending to devices using custom protocol encodings. The binWriter is designed for byte-oriented data frames where all fields are aligned on a byte boundary.

¶ Byte Writer

The byte writer allows for rapid encoding of binary data that is generally byte-aligned. It supports "streaming" style building, where writes begin at offset 0 in the buffer and each write appends at the current position in the stream.

¶ Notes

- The skip() method allows skipping ahead (positive value) or backwards (negative value) in the stream.

- The seek() method allows changing the location of the cursor in the stream.

- The default byte ordering is big-endian.

- The skip(), seek(), big(), and little() methods support chaining so they can be combined with write method calls.

- The status() method returns the index, length, and buffer for the writer. Note the buffer is an array of bytes, but when printed in logs may be base64 encoded.

¶ Samples

// create a new byte writer assuming little endian byte ordering (default is big).

var writer = convert.binWriter({endian: 'little'})

// write an unsigned 8, 16, or 32 bit integer

writer.writeUint8(5)

writer.writeUint16(1250)

writer.writeUint32(928304)

// write a signed 8, 16, or 32 bit integer

writer.writeInt8(-5)

writer.writeInt16(-1250)

writer.writeInt32(-928304)

// write a 32 or 64 bit floating value

writer.writeFloat32(3.14)

writer.writeFloat64(3.14159)

// write a string, string will not be null terminated, optional length parameter to specify any null padding after the last character.

writer.writeString('hello', 10)

writer.writeString('hello')

// write 3 bytes from a binary input

writer.writeBytes([0x01,0x02,0x03], 'binary')

// write 3 bytes from a hex encoded string input

writer.writeBytes('010203', 'hex')

// write 3 bytes from a base64 encoded string input

writer.writeBytes('AQID', 'base64')

// write 8 bytes, and fill any space after the buffer with nulls

writer.writeBytes('010203', 'hex', 8)

// write a date from an rfc3339 string as a 4 byte unix timestamp.

writer.writeDate('2025-08-09T12:33:44Z','unix')

// write a date from a Date() object as a 4 byte unix timestamp.

writer.writeDate(new Date(),'unix')

// write a date as an ISO8601 formatted string.

writer.writeDate(new Date(),'iso8601')

// chaining writes

writer.writeUint8(1).writeString('helloworld')

// get the status of the write buffer

var st = writer.status()

// st = { index: 17, length: 25, buffer: [0x01, 0x02...]}

// set the writer into little endian mode

writer.little()

// set the writer into big endian mode

writer.big()

// get the final byte buffer as a byte array

var bytes = writer.getBytes('binary')

// get the final byte buffer as a hex encoded string

var hex = writer.getBytes('hex')

// get the final byte buffer as a base64 encoded string

var b64 = writer.getBytes('base64')
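The effect of the byte-order setting on multi-byte writes can be checked with a short stand-alone sketch. `encodeUint16` and `toHex` are hypothetical helpers built on the standard `DataView` API, shown only to illustrate the byte layout you should expect from a 16 bit write such as the `1250` used above.

```javascript
// Sketch only: encodeUint16 and toHex are hypothetical helpers, not platform functions.
function encodeUint16(value, littleEndian) {
  var view = new DataView(new ArrayBuffer(2))
  view.setUint16(0, value, littleEndian) // third argument selects little endian
  return Array.from(new Uint8Array(view.buffer))
}

// Format a byte array as a lowercase hex string.
function toHex(bytes) {
  return bytes.map(function (b) {
    return ('0' + b.toString(16)).slice(-2)
  }).join('')
}

var bigEndianHex = toHex(encodeUint16(1250, false))   // '04e2'
var littleEndianHex = toHex(encodeUint16(1250, true)) // 'e204'
```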

¶ Protobuf conversion

The following functions provide encoding and decoding of Google Protobuf buffers.

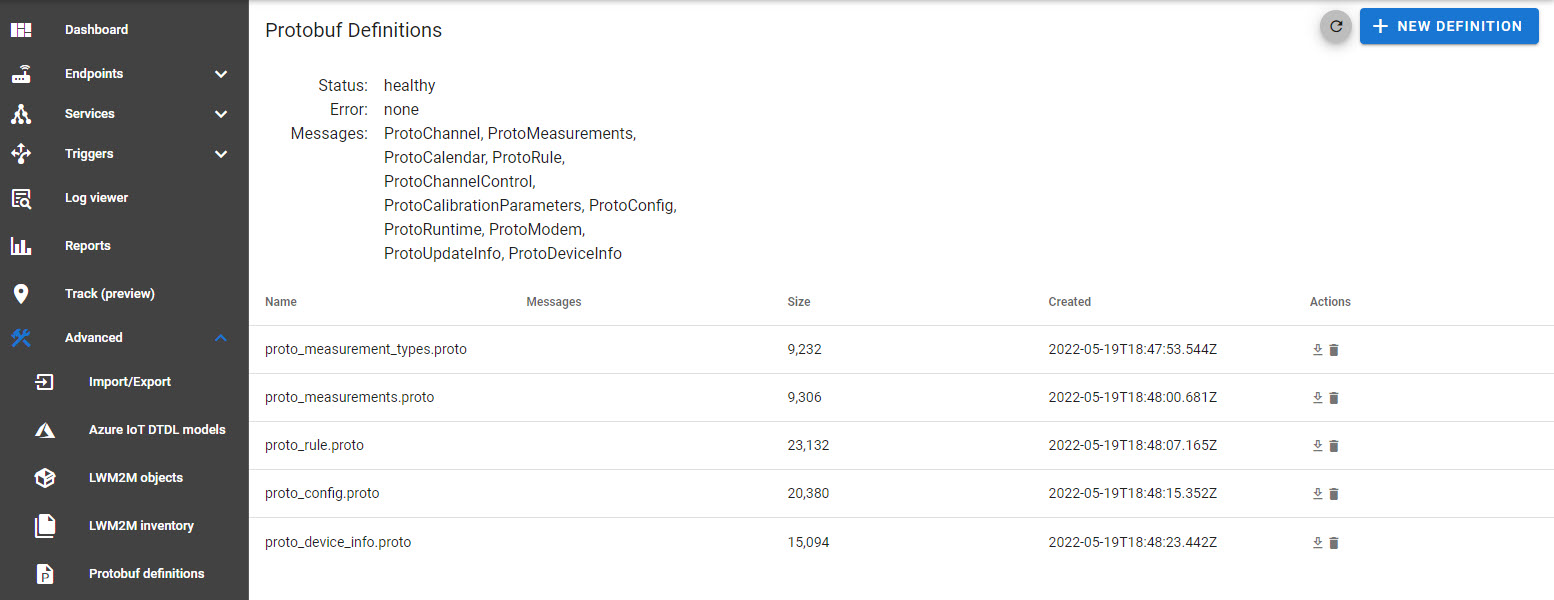

¶ Uploading your .proto files

Before you can encode or decode protobuf messages, you need to upload your .proto files to the IoT Bridge. You will find the definitions by navigating to Advanced -> Protobuf Definitions.

- Add new definitions by clicking New definition and selecting the file to upload. If your .proto files have dependencies, you may see an error until you have imported all of the required files.

- Note the Messages field, as you will need the message type name when encoding or decoding a buffer.

¶ Using the protobuf functions

// convert a binary protobuf message (in 'bytes' variable) to an object

var obj = convert.protobufToObject(bytes, 'ProtoMeasurements')

// convert a binary protobuf message to an object, keeping the original field names and emitting default values

var obj = convert.protobufToObject(bytes, 'ProtoMeasurements', {origName: true, emitDefaults: true})

// convert an object to a binary message

var bytes = convert.objectToProtobuf({serialNum: [0x01, 0x02] /* ... */}, 'ProtoMeasurements')

- convert.protobufToObject(bytes, messageType[, options])

  - bytes: An array of bytes to be decoded.

  - messageType: The message type to be decoded.

  - options: Optional options object to control decoding.

    - origName: Boolean. If true, the original variable name is emitted; otherwise the output will be in camelCase.

    - emitDefaults: If true, then boolean false and numerical 0's will be emitted, otherwise they will not.

- convert.objectToProtobuf(object, messageType)

  - object: A javascript object to be encoded to protobuf.

  - messageType: The message type to be encoded.

¶ Cache

The following functions provide access to a high-speed in-memory cache for storing information between requests. The maximum lifetime of a record in the cache is 86400 seconds (1 day).

// set an item in the cache with 1 hour TTL

cache.set('mykey', 'myvalue', 3600)

// get an item from the cache

var value = cache.get('mykey')

// check if the item was returned

if(value) {

//process data

}

// delete an item from the cache

cache.del('mykey')

¶ Date

The date class enables quick parsing and formatting of timestamps. This is particularly useful when processing data from different services that report time in non-standard formats.

The reference time used in the format is the specific time:

Mon Jan 2 15:04:05 MST 2006

which is Unix time 1136239445. Since MST is GMT-0700, the reference time can be thought of as

01/02 03:04:05PM '06 -0700

To define your own format, write down what the reference time would look like formatted your way. The model is to demonstrate what the reference time looks like so that the Format and Parse methods can apply the same transformation to a general time value.

Within the format string, an underscore _ represents a space that may be replaced by a digit if the following number (a day) has two digits; for compatibility with fixed-width Unix time formats.

A decimal point followed by one or more zeros represents a fractional second, printed to the given number of decimal places. A decimal point followed by one or more nines represents a fractional second, printed to the given number of decimal places, with trailing zeros removed. When parsing (only), the input may contain a fractional second field immediately after the seconds field, even if the layout does not signify its presence. In that case a decimal point followed by a maximal series of digits is parsed as a fractional second.

The underlying functionality comes from Golang's time.Parse() and time.Format() functions, and detailed documentation can be found here: https://golang.org/pkg/time/

Several pre-defined formats are available:

- rfc3339 - 2006-01-02T15:04:05Z

- iso8601 - 2006-01-02T15:04:05Z

- rfc1123 - Mon, 02 Jan 2006 15:04:05 MST

- rfc1123z - Mon, 02 Jan 2006 15:04:05 -0700

// parse a timestamp string into a Date() object

var d = date.parse('2021/01/30 12:54:22', '2006/01/02 15:04:05')

log.user(d.toUTCString())

// format a Date() object according to the rfc1123 format

var str = date.format(d, 'rfc1123')

// format a Date() object according to rfc3339 and shift it to the appropriate timezone. Assumes "d" is in UTC.

var str = date.format(d, 'rfc3339', 'America/New_York')

// convert a timestamp in one format to another format in one function

// destTimestamp = date.convert( sourceTimestamp, sourceFormat, destFormat)

var str = date.convert('2021/01/30 12:54:22', '2006/01/02 15:04:05', 'rfc3339')
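The reference-time idea can be made concrete with a small stand-alone sketch that treats each piece of the reference time as a placeholder. `parseWithLayout` is a hypothetical helper that handles only the six numeric tokens used in the samples above (`2006`, `01`, `02`, `15`, `04`, `05`) and assumes the input is in UTC. The platform's `date.parse()` supports the full Golang layout syntax.

```javascript
// Sketch only: parseWithLayout is a hypothetical helper, not the platform's date.parse().
// It supports six numeric layout tokens and assumes the input time is UTC.
var LAYOUT_TOKENS = {
  '2006': { field: 'year', pattern: '(\\d{4})' },
  '01': { field: 'month', pattern: '(\\d{2})' },
  '02': { field: 'day', pattern: '(\\d{2})' },
  '15': { field: 'hour', pattern: '(\\d{2})' },
  '04': { field: 'minute', pattern: '(\\d{2})' },
  '05': { field: 'second', pattern: '(\\d{2})' }
}

// Escape characters that have a special meaning in a regular expression.
function escapeRegex(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, '\\$&')
}

function parseWithLayout(input, layout) {
  var fields = []
  var pattern = ''
  var tokenRe = /2006|01|02|15|04|05/g
  var last = 0
  var m
  // Turn the layout into a regular expression, one capture group per token.
  while ((m = tokenRe.exec(layout)) !== null) {
    pattern += escapeRegex(layout.slice(last, m.index))
    pattern += LAYOUT_TOKENS[m[0]].pattern
    fields.push(LAYOUT_TOKENS[m[0]].field)
    last = tokenRe.lastIndex
  }
  pattern += escapeRegex(layout.slice(last))

  var match = new RegExp('^' + pattern + '$').exec(input)
  if (!match) {
    throw new Error('input does not match layout')
  }
  var parts = { year: 1970, month: 1, day: 1, hour: 0, minute: 0, second: 0 }
  fields.forEach(function (field, i) {
    parts[field] = parseInt(match[i + 1], 10)
  })
  return new Date(Date.UTC(parts.year, parts.month - 1, parts.day, parts.hour, parts.minute, parts.second))
}

var parsed = parseWithLayout('2021/01/30 12:54:22', '2006/01/02 15:04:05')
console.log(parsed.toISOString()) // 2021-01-30T12:54:22.000Z
```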

¶ Email functions

Simple emails can be sent from the platform. This is intended for monitoring and alerting, not for "end customer" communications. All emails are branded for the platform and sent from the platform's email address.

var obj = {

to: ['support@tartabit.com'],

subject: 'My subject',

title: 'My title',

message: 'Here is the message to send, it can contain template {{.Params.Value}} params.',

params: {

Value: 'my value'

},

tables: [{

title: 'my table',

headers: [ 'col1', 'col2', 'col3'],

columns: [ 'c1', 'c2', 'c3'],

rows: [

{ c1: 'abc', c2: 'def', c3: 123},

{ c1: 'xyz', c2: 'pqr', c3: 456}

]

}]

}

email.send(obj)

- email.send(mailObj)

  - mailObj: An object with the details of the mail message to send.

    - to: Array of email addresses to send to.

    - subject: Subject of the email.

    - title: Title at the top of the email message.

    - message: The message body of the email, it can use Golang's template format to facilitate dynamic content generation and easier manipulation of data structures.

    - params: Parameters to pass into the message template that will be replaced by the runtime engine. In the example above, you can use {{ .Params.Value }} in your email message.

    - tables: Array of objects containing table information to include in the email.

      - title: Title for the table.

      - headers: Array of strings for the column headers.

      - columns: Array of keys to use to extract the columns from the row objects.

      - rows: Array of objects for each row, keys must match columns from above.

¶ Email templating

The templating allows for substituting values at runtime instead of building a large string in a trigger and sending it directly. Below are the variables available in the template:

- {{.Title}}: Title of the email.

- {{.Params}}: Any custom parameters passed into the mail template.

¶ Endpoint functions

Update an endpoint based on dynamic data.

¶ endpoint.create(serviceKey, endpointKey, params, tags)

Create an endpoint, returns null on success, or a string with the error on failure.

// create an endpoint if it doesn't exist, and assign a tag 'new' to 'true'

var rslt = endpoint.create('myservicekey', 'myendpointkey', { user: 'user1', pass: 'pass1'}, {new: 'true'})

if(rslt==null) {

log.trace('success')

} else {

log.trace('failure: '+rslt)

}

- serviceKey: The service to attach the endpoint to.

- endpointKey: The endpoint key to create, if an endpoint with this key already exists, this function is a NO-OP.

- params: Configuration parameters for the endpoint. Parameters are endpoint specific, and you should refer to an export of your endpoints for possible values.

- tags: Key/value pairs, the value must be a string or a primitive convertible to a string value.

¶ endpoint.update(endpointKey, changes)

Update an endpoint, returns null on success, or a string with the error on failure.

// update the configuration of an endpoint, the following fields can be updated:

// name, key, secondsBeforeMissing, params.{paramName}

endpoint.update('myendpointkey', { name: 'new name', params: { timeout: 30 } })

// update an endpoint with a statusDetails 'iccid' field with the ICCID of the endpoint.

endpoint.update('myendpointkey', { statusDetails: { iccid: '8901393541912401' } })

- endpointKey: The endpoint key to update.

- changes: An object with the fields to be updated.

  - name: Update the name of the endpoint.

  - key: Update the key of the endpoint.

  - secondsBeforeMissing: Update the number of seconds of inactivity before the endpoint is declared missing.

  - params: Update configuration parameters for the endpoint. Parameters are endpoint specific, and you should refer to an export of your endpoints for possible values.

  - statusDetails: Object with key/value pairs to set in the status details. The values must be strings or primitive values.

  - addTags: Functions similar to the updateTags() method.

  - removeTags: Functions similar to the updateTags() method.

¶ endpoint.updateTags(endpointKey, addTags, removeTags)

Update the tags on an endpoint. Note that updates do not take effect immediately.

// update tags, by adding/removing tags from an endpoint

endpoint.updateTags('myendpointkey', {'newtag':'value123'})

// remove tag 'newtag' from the defined endpoint

endpoint.updateTags('myendpointkey', null, ['newtag'])

- endpointKey: The endpoint key to update.

- addTags: Object with key/value pairs, the values must all be strings. Existing tags with the defined keys will be updated, and the tag will be added if it does not already exist.

- removeTags: Array of strings with a list of tags to remove.

¶ endpoint.delete(endpointKey)

Delete an endpoint, returns null on success, or a string with the error on failure.

endpoint.delete('myendpointkey')

- endpointKey: The endpoint key to delete.

¶ endpoint.searchOne(query)

Find a single endpoint and return it. This function always returns an object; if the request fails, the object has an error field describing the failure.

var rslt = endpoint.searchOne('key=="mykey"')

if(rslt.error) {

// failed

} else {

// process here

}

- query: A valid query string to find an endpoint.

¶ endpoint.search(query, callback(ep))

Search endpoints and call the callback for each endpoint found. Returns null on success, or a string with the error on failure.

endpoint.search('status==connected && tags.server==production', function(ep) {

log.trace('found ' + ep.name)

})

- query: A valid query string to find an endpoint.

¶ endpoint.storageGet(endpointKey, storageKey)

Get an endpoint storage object. Throws an exception if the object doesn't exist.

// get an endpoint storage object

try {

var obj = endpoint.storageGet('myendpointkey', 'mystoragekey')

} catch(e) {

log.trace('failed: '+e)

}

- endpointKey: The endpoint key to find.

- storageKey: The storage key to find.

¶ endpoint.storageSet(endpointKey, storageKey, obj)

Set an endpoint storage object. Throws an exception if there is an error.

// store an endpoint storage object

try {

endpoint.storageSet('myendpointkey', 'mystoragekey', {field1: 'abc', field2: 'def'})

} catch(e) {

log.trace('failed: '+e)

}

- endpointKey: The endpoint key to find.

- storageKey: The storage key to update.

- obj: The object to store, must be a plain javascript object.

¶ endpoint.storageDelete(endpointKey, storageKey)

Delete an endpoint storage object from an endpoint. Returns silently if the storageKey does not exist.

// delete an endpoint storage object

try {

endpoint.storageDelete('myendpointkey', 'mystoragekey')

} catch(e) {

log.trace('failed: '+e)

}

- endpointKey: The endpoint key to find.

- storageKey: The storage key to delete.

¶ Exec functions

Functions for generating events to propagate data to a pipeline of triggers. Sometimes you don't want to process all of your data in a single trigger, or you may wish to have multiple devices process data in a common way. The exec functions can help in this regard.

- Generic events will inherit the service and endpoint values from the calling trigger. This allows you to easily access the metadata from the event.

¶ exec.now(key, data, [options])

Asynchronously execute a generic event. The calling trigger does not get any feedback regarding the execution.

// generate a "generic" event called "myevent" with data.

exec.now('myevent', {deviceId: 'mydevice', temp: 35.2})

// generate an event, and override the endpoint to a new endpoint. This is useful when the source event does not have an endpoint, and the endpoint key is derived when decoding the data.

exec.now('myevent', {deviceId: 'mydevice', temp: 35.2}, {endpointkey:'mydevice'})

- key: The generic key to use when generating the event.

- data: An object to pass in the "data" field of the event.

- options: Options for advanced configuration.

  - endpointKey: Override the default endpoint key, or set an endpoint if one does not exist. This will update the event.endpoint variable in the new event.

  - apptoken: Make the execution a cross-account event. By using an appToken from another account, the event will be generated in the target account. While the endpoint and service will be copied in the event, the endpoint and service themselves are not available to the target account for operations like endpoint.update().

¶ exec.sync(key, data, [timeout], [options])

Synchronously execute a generic event and wait for the reply. Note that exec.sync() does not support cross-organization calls.

// make a synchronous call to another trigger

// calling trigger

var ret = exec.sync('myfn', {input:'data'})

log.trace('data returned', ret.returndata)

// call a synchronous function with a 45000 millisecond timeout

var ret = exec.sync('myfn', {input:'data'}, 45000)

log.trace('data returned', ret.returndata)

// the trigger that implements the event with event type "Generic" and key "myfn" must call "trigger.reply()" to send the reply back to the calling trigger.

trigger.reply({returndata:'my return data'})

- key: The generic key to use when generating the event.

- data: An object to pass in the "data" field of the event.

- timeout: The number of milliseconds to wait for a reply, default is 5000. While there is no inherent maximum, it is highly recommended to use a value less than 300000 (5 minutes).

- options: Options for advanced configuration.

  - endpointKey: Override the default endpoint key, or set an endpoint if one does not exist. This will update the event.endpoint variable in the new event.

¶ Geospatial functions

Helper functions for geospatial operations

// generate a geohash based on latitude and longitude

var geohash = geospatial.geohash(45.123,-80.1234)

// calculate the 2D distance between two coordinates

var dist = geospatial.geodist(45.123,-80.1234, 45.353,-80.220)
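The text above does not state which formula or unit `geospatial.geodist()` uses. As a rough cross-check when testing, the great-circle (haversine) distance between the same two coordinates can be computed with the stand-alone sketch below. `haversineMeters` is a hypothetical helper, and both the mean Earth radius of 6371000 m and the result in meters are assumptions of this example, not documented platform behaviour.

```javascript
// Sketch only: haversineMeters is a hypothetical helper, not the platform's geodist().
// Assumptions: spherical Earth with a mean radius of 6371000 m, result in meters.
function haversineMeters(lat1, lon1, lat2, lon2) {
  var R = 6371000
  var toRad = Math.PI / 180
  var dLat = (lat2 - lat1) * toRad
  var dLon = (lon2 - lon1) * toRad
  var a = Math.sin(dLat / 2) * Math.sin(dLat / 2) +
    Math.cos(lat1 * toRad) * Math.cos(lat2 * toRad) *
    Math.sin(dLon / 2) * Math.sin(dLon / 2)
  return 2 * R * Math.asin(Math.sqrt(a))
}

var meters = haversineMeters(45.123, -80.1234, 45.353, -80.220)
console.log(Math.round(meters) + ' m')
```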

¶ Hash functions

Generate hashes from variables

// generate an IEEE CRC32 hash, result will be an unsigned 32 bit integer

var sum = hash.crc32([0x01, 0x53, 0x22])

// generate an ISO CRC64 hash, return will be an unsigned 64 bit integer

var sum = hash.crc64('my string value')

// generate an ISO CRC64 hash, return will be a SIGNED 64 bit integer.

// objects will be formatted as JSON prior to hashing, note that the order of parameters may change, which could result in hashes of the same object being different.

var sum = hash.crc64signed({a: 123, b: 'def'})

// generate a SHA256 32 byte hash of the data. Result will be a hex encoded string.

var sum = hash.sha256({a: 123, b: 'def'})
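If you need to reproduce a checksum on the device or in a test harness, the IEEE CRC32 algorithm can be written in a few lines. The sketch below is a plain bitwise implementation using the reflected polynomial 0xEDB88320. `crc32Ieee` is a hypothetical helper, and whether its output matches `hash.crc32()` for every input type (for example strings or objects) is an assumption you should verify.

```javascript
// Sketch only: crc32Ieee is a hypothetical helper, not the platform's hash.crc32().
// Bitwise CRC-32 (IEEE 802.3) over an array of bytes.
function crc32Ieee(bytes) {
  var crc = 0xFFFFFFFF
  for (var i = 0; i < bytes.length; i++) {
    crc ^= bytes[i]
    for (var bit = 0; bit < 8; bit++) {
      // Shift right; apply the reflected polynomial when the low bit was set.
      crc = (crc & 1) ? (crc >>> 1) ^ 0xEDB88320 : crc >>> 1
    }
  }
  return (crc ^ 0xFFFFFFFF) >>> 0 // force an unsigned 32 bit result
}

// The ASCII bytes of "123456789" are the standard check input for CRC-32.
var check = crc32Ieee([0x31, 0x32, 0x33, 0x34, 0x35, 0x36, 0x37, 0x38, 0x39])
console.log(check.toString(16)) // cbf43926
```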

¶ History functions

Find records in the Event History. For example, if you receive an "ignition off" event, you can lookup the previous "ignition on" event to calculate the duration the ignition was on.

// history.one(params) The parameters define how to find a record, returns null if not found.

var rec = history.one(params)

// history.search(search, callback) Search is a valid search string (that can be used in the Event viewer), and the callback is called for each matching event.

function mycallback(evt) {

log.trace('event', evt)

}

history.search('type=="udp-receive"', mycallback)

// history search with 'next' for iteration

var next = undefined

while (true) {

next = history.search('data.data.cmd==GTSTT && ts > date("2025-07-13T00:00:00Z")', mycallback, { limit: 5, next: next })

if (next == 'done') {

break

}

}

log.trace('done')

- history.one(params)

  - params: The params object defines how to search for an event.

    - myEndpoint: Boolean, set to true to search for events from the same endpoint as the endpoint in the event.

    - type: Type of the event to search for.

    - generic: Search for generic triggers with the key specified in the Generic field.

    - ageMin: Search for an event at least 'ageMin' seconds old.

    - ageMax: Search for an event at most 'ageMax' seconds old.

    - query: Search for an event that matches the query string.

- history.search(search, callback[, options])

  - search: A valid search query that can be used in the Event Viewer.

  - callback: A function that accepts one argument which is the event being returned.

  - options: (optional) An object with options for the return.

    - limit: Limit the number of events returned, default of 100.

    - next: Input with a next string returned from a previous invocation, useful to page through results. Note that when next is supplied, the search field is ignored. When the last batch that matches the query is returned, next will be set to 'done', indicating that there are no more records.

¶ JSON functions

Helper functions for dealing with JSON objects

// generate a JSON-Patch (http://jsonpatch.com/) document by comparing two objects

var patch = json.patchCreate(oldJson,newJson)

// apply a JSON-Patch to a JSON document

var newJson = json.patchApply(oldJson, patch)

¶ Log functions

Log custom messages to your account's system log. Log functions accept two parameters, the first is the log message, and the second is any variable that will be shown in the drop-down expander in the log viewer.

// log at trace level

log.trace('hello world')

// log at user level

log.user('hello world')

// log at warning level

log.warn('danger ahead')

// logging with a data object attached

log.trace('my data', event.data)

¶ Network functions

Various helper functions for networking

// network.isIpInCidrs(addr, cidrs) check if an address is within a group of CIDRs, useful for whitelisting IP addresses.

var isInside = network.isIpInCidrs('192.168.10.22',['192.168.10.0/24'])

- network.isIpInCidrs(addr, cidrs): Returns true if the addr is inside the networks specified by cidrs.

  - addr: The IP address, or IP:Port, to be checked.

  - cidrs: An array of IP/bitmask entries to compare against.
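To illustrate what a CIDR membership check involves, here is a stand-alone sketch for IPv4 addresses. `ipv4ToInt`, `ipv4InCidr`, and `ipv4InCidrs` are hypothetical helpers. Unlike the platform function, this sketch does not accept the IP:Port form.

```javascript
// Sketch only: hypothetical helpers, not the platform's network.isIpInCidrs().
// Handles plain IPv4 addresses without a port.
function ipv4ToInt(addr) {
  var parts = addr.split('.')
  if (parts.length !== 4) {
    throw new Error('not an IPv4 address: ' + addr)
  }
  return parts.reduce(function (acc, p) {
    var n = parseInt(p, 10)
    if (!(n >= 0 && n <= 255)) {
      throw new Error('invalid octet in: ' + addr)
    }
    return acc * 256 + n
  }, 0)
}

function ipv4InCidr(addr, cidr) {
  var pieces = cidr.split('/')
  var bits = parseInt(pieces[1], 10)
  var blockSize = Math.pow(2, 32 - bits) // number of addresses in the block
  // Round the network address down to the start of its block.
  var base = Math.floor(ipv4ToInt(pieces[0]) / blockSize) * blockSize
  var ip = ipv4ToInt(addr)
  return ip >= base && ip < base + blockSize
}

// True if the address falls inside any of the listed networks.
function ipv4InCidrs(addr, cidrs) {
  return cidrs.some(function (cidr) {
    return ipv4InCidr(addr, cidr)
  })
}

var inside = ipv4InCidrs('192.168.10.22', ['192.168.10.0/24'])  // true
var outside = ipv4InCidrs('192.168.11.22', ['192.168.10.0/24']) // false
```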

¶ Queue functions

Functions for a simple FIFO queue.

- Backed by disk so the queue can be used for persistent storage (up to 1 month).

- Maximum number of entries is 65535, new items past the 65535th will drop the oldest record.

- Queues can also be used in event mode. When enabled, queue-activity events will be generated when items are put in the queue. Triggers that process these events must use trigger.reply({}) to acknowledge processing the event.

// put an item in the queue

queue.put('myqueue',{value:'mykey'})

// get an item from the queue (returns null if the queue is empty)

var obj = queue.get('myqueue')

if(obj) {

// queue had a record

} else {

// queue was empty

}

// flush all items off of the queue

queue.flush('myqueue')

// put an item on the queue and generate a "queue-activity" event.

queue.put('myqueue',{value:'mykey'}, {event: true})

¶ Random functions

Functions to generate random data.

// random.string(length) Generate a random string of <length> bytes.

var str = random.string(10)

// random.hex(length) Generate a random hex string (0-9a-f) of <length> bytes.

var str = random.hex(8)

// random.integer(min,max) Generate a random integer in the range of [min, max).

var num = random.integer(10,15)

// random.double(min,max) Generate a random floating point number between [min, max).

var num = random.double(15.5,27.55)

¶ String functions

Functions that operate on strings

// parses a delimited string into an object

// sample: 3520091122891516,302,220,2d83,358070A,100,20200101033448

convert.delimitedStringToObject(event.data.payload,'key|net.mcc|net.mnc|net.lac|net.cellId|battery(int)|ts(timestamp,20060102150405)',',')

// sample output: { key: '3520091122891516', net: {mcc: '302', mnc: '220', lac: '2d83', cellId: '358070A'}, battery: 100, timestamp: '2020-01-01T03:34:48Z'}

- Pattern keys are assumed to be strings unless overridden.

- The first argument to a pattern element is the type: int, float, string, bool, or timestamp.

- timestamp has a second parameter for the format, following the standard Golang timestamp format.

¶ Trigger functions

Functions for manipulating triggers.

¶ Trigger libraries

You can set the event type of a trigger to Library, which makes it available to be included in other scripts. Common use cases:

- Common reusable functions

- Global configuration variables

// import a library trigger into another trigger

// assumes you have a library trigger with name 'mylibrary'

trigger.include('mylibrary')

¶ Reply to a synchronous event

Some trigger events expect a reply (for example http.get) to return content to the event generator. To reply, use the trigger.reply() function.

// some events require or can optionally accept a reply from the trigger. NOTE that trigger.reply() stops execution of the trigger.

trigger.reply({ /* reply data */ })

¶ Trigger wait/signal

Asynchronous triggers can wait for a signal (a unique string), and other triggers can signal the waiting trigger by calling trigger.signal(). A data object can be passed between the triggers enabling advanced synchronization tasks.

- Multiple calls to signal() will only release the wait() one time.

- signal() can be called before wait(). If called before the wait(), the wait() will simply "fall-through" without blocking.

- waitReset() can be called to clear any existing signals on a key before calling wait(). You should only call waitReset() before you initiate the asynchronous request to ensure any signal() calls do not get lost.

- Keys should be considered with care, either specific to the endpoint or the particular transaction. If you can propagate transaction information to the asynchronous operation, consider using random.string(8) or similar to generate a key with uniqueness for the operation.

- A wait/signal transaction must complete within 1 hour, otherwise the tracking will be lost.

// signal a waiting trigger

trigger.signal('mysignal', {key: "value"})

// wait for a signal for a number of seconds, it returns an object

var sig = trigger.wait('mysignal', 5)

trigger.waitReset('mysignal')

¶ Trigger rate limiting

Users can rate limit certain operations to ensure that duplicates are not processed, or multiple commands aren't sent to a device at the same time. There are many use cases for rate limiting.

// check a rate limit against 'mykey' with a count of 1, a limit of 1, and a time period of 30 seconds. Returns false if rate limited.

// Effectively allows execution once every 30 seconds.

if (!trigger.ratelimit('mykey', 1,1, 30)) {

trigger.exit()

}

// Effectively allows execution 10 times per minute.

if (!trigger.ratelimit('mykey', 1,10, 60)) {

trigger.exit()

}

// Per-endpoint limiting example

if (!trigger.ratelimit(event.endpoint.key+'sms', 1,10, 60)) {

trigger.exit()

}

- trigger.ratelimit(key, count, limit, interval)

  - key: The key to use for the limiter, can be a constant, or include a field like the endpoint key for per-endpoint limiting.

  - count: The number to increment the rate limiter for this execution, typically 1.

  - limit: The limit for the rate limiter.

  - interval: The time interval, in seconds, over which the limit is enforced.
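To make the relationship between count, limit, and interval concrete, the sketch below implements a simple fixed-window counter with the same parameter order. This is only one possible interpretation. The algorithm the platform actually uses is not described here, and `sketchRateLimit` with its explicit `now` argument (in milliseconds) is a hypothetical helper for illustration.

```javascript
// Sketch only: a fixed-window counter, not the platform's trigger.ratelimit().
// The explicit `now` argument (milliseconds) makes the behaviour easy to follow.
var windows = {}

function sketchRateLimit(key, count, limit, intervalSeconds, now) {
  var w = windows[key]
  // Start a new window if none exists or the current one has expired.
  if (!w || now - w.start >= intervalSeconds * 1000) {
    w = windows[key] = { start: now, used: 0 }
  }
  if (w.used + count > limit) {
    return false // rate limited
  }
  w.used += count
  return true
}

var first = sketchRateLimit('mykey', 1, 1, 30, 0)      // true: first call in the window
var second = sketchRateLimit('mykey', 1, 1, 30, 10000) // false: still inside the 30 second window
var third = sketchRateLimit('mykey', 1, 1, 30, 30000)  // true: a new window has started
```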